Bilinear Policy Networks, Forked Agents, and PeSFA

The field of reinforcement learning (RL) has seen remarkable advancements, with deep reinforcement learning (DRL) leading the charge in training autonomous agents for complex tasks. Despite these achievements, a persistent challenge remains: enabling agents to generalize effectively across varied environments and tasks. This capability is crucial for applications in robotics, autonomous driving, and other domains requiring adaptability to dynamic conditions. Recent studies introduce novel approaches to tackle this issue, including the use of Bilinear Policy Networks, Forked Agents, and Policy-Extended Successor Feature Approximators (PeSFA). This article delves into these innovative techniques, exploring how they enhance generalization and efficiency in DRL, and their implications for future engineering applications.

Bilinear Policy Networks: Enhancing Representation Learning

One critical challenge in DRL is the ability of agents to generalize across different environments. Traditional policy networks often struggle to capture subtle differences between similar states, limiting their generalization capabilities. A recent study addresses this issue by introducing a bilinear policy network designed to enhance representation learning.

- The Generalization Challenge

Typically, DRL agents optimize a policy network based on known environments. However, these agents often fail when exposed to new, unseen environments due to their inability to learn representations that effectively differentiate between similar states. This limitation is particularly problematic in fields like robotics and autonomous driving, where minor variations in the environment can significantly impact performance.

- The Bilinear Policy Network Approach

The bilinear policy network integrates two feature extractors that interact pairwise in a translationally invariant manner. This bilinear structure allows the network to recognize and differentiate subtle variations among highly similar states, thereby improving the agent’s ability to generalize.
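To make the pairwise interaction concrete, here is a minimal NumPy sketch of bilinear pooling over two feature maps. It is an illustration under stated assumptions, not the study's implementation: the function names `bilinear_features` and `policy_logits`, the signed square root and L2 normalization steps, and the random arrays standing in for the two feature extractors' outputs are all hypothetical choices.

```python
import numpy as np


def bilinear_features(feat_a, feat_b):
    """Combine two feature maps by pairwise (outer-product) interaction.

    feat_a: array of shape (C_a, H, W) from the first extractor (assumed layout)
    feat_b: array of shape (C_b, H, W) from the second extractor (assumed layout)
    Returns a normalized vector of length C_a * C_b.
    """
    c_a, h, w = feat_a.shape
    c_b = feat_b.shape[0]
    a = feat_a.reshape(c_a, h * w)
    b = feat_b.reshape(c_b, h * w)
    # Sum of per-location outer products. Summing over locations is what
    # makes the descriptor insensitive to where a pattern appears.
    pooled = a @ b.T
    x = pooled.reshape(-1)
    # Signed square root and L2 normalization (assumed post-processing).
    x = np.sign(x) * np.sqrt(np.abs(x))
    return x / (np.linalg.norm(x) + 1e-8)


def policy_logits(feat_a, feat_b, weights, bias):
    """Linear policy head on top of the bilinear descriptor."""
    return weights @ bilinear_features(feat_a, feat_b) + bias


# Random arrays stand in for the outputs of two convolutional extractors.
rng = np.random.default_rng(0)
feat_a = rng.normal(size=(3, 4, 4))
feat_b = rng.normal(size=(5, 4, 4))
weights = rng.normal(size=(2, 15))  # 2 actions, 3 * 5 bilinear features
logits = policy_logits(feat_a, feat_b, weights, np.zeros(2))
```

Because the outer products are summed over all spatial locations, shifting both feature maps by the same offset (with wrap-around) leaves the descriptor unchanged, which is one way to read the "translationally invariant" property described above.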

For autonomous systems, a bilinear policy network could make behavior more reliable when the agent meets states that closely resemble, but do not match, those seen in training. In practice this may translate into better navigation and manipulation in unpredictable environments.

Forked Agents: Creating an Ecosystem for Enhanced Generalization

Another promising approach to improving generalization in RL is the use of forked agents. This method involves creating an ecosystem of RL agents, each with a specialized policy tailored to a specific subset of environments.

- The Ecosystem of Forked Agents

The core idea is to move away from a single-policy model and instead develop multiple agents, each fine-tuned for different environments. This ecosystem model acknowledges that no single policy can handle all scenarios effectively.

- Initialization Techniques and Empirical Evaluation

A critical aspect of this approach is the initialization of new agents when encountering unfamiliar environments. The study explores various initialization techniques inspired by deep neural network initialization and transfer learning, providing new agents with a robust starting point to adapt quickly to new scenarios.
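The fork-and-initialize idea can be sketched as follows. This is a hedged illustration only: the `Agent` class, the `assign_or_fork` function, the performance `threshold`, and the rule of copying the parameters of the best-scoring existing agent are assumptions made for the example. The study compares several initialization techniques, and this sketch shows just one transfer-style option.

```python
import copy
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Hypothetical agent: a parameter dict plus the environments it covers."""
    params: Dict[str, float]
    envs: List[str] = field(default_factory=list)


def assign_or_fork(
    pool: List[Agent],
    env_id: str,
    evaluate: Callable[[Agent, str], float],
    train: Callable[[Agent, str], None],
    threshold: float,
) -> Agent:
    """Return the agent responsible for env_id, forking a new one if needed."""
    if not pool:
        raise ValueError("the ecosystem needs at least one agent to fork from")
    scores = [evaluate(agent, env_id) for agent in pool]
    best_idx = max(range(len(pool)), key=scores.__getitem__)
    best = pool[best_idx]
    if scores[best_idx] >= threshold:
        # An existing agent already handles this environment well enough.
        best.envs.append(env_id)
        return best
    # Fork: start from a copy of the best existing agent's parameters
    # (assumed initialization rule), then fine-tune on the new environment.
    child = Agent(params=copy.deepcopy(best.params), envs=[env_id])
    train(child, env_id)
    pool.append(child)
    return child
```

In this sketch `evaluate` and `train` are supplied by the caller, so the same routing logic can sit on top of any RL algorithm. The parent agent is left untouched when a fork happens, which keeps its performance on its own environments intact.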

Empirical studies demonstrated that forked agents significantly enhance adaptation speed and effectiveness. In tests with procedurally generated environments, these agents exhibited improved generalization compared to traditional RL models, particularly in dynamically changing conditions.

The ecosystem of forked agents offers practical strategies to improve the robustness and adaptability of RL models. With this approach, a fleet of robots could be deployed in which each robot is specialized for different tasks or conditions, with new agents forked as new conditions appear. In autonomous driving, an ecosystem of agents could help vehicles adapt to diverse driving conditions, improving overall safety and performance.

Policy-Extended Successor Feature Approximator (PeSFA): Improving Efficiency

Generalization in DRL involves applying learned behaviors to new tasks or environments. Traditional DRL approaches often overfit to specific training conditions, limiting their effectiveness in new scenarios. Successor Features (SFs) offer a solution by decoupling the environment’s dynamics from the reward structure, but they rely heavily on the learned policy, which may not be optimal for other tasks.

- PeSFA: A Novel Framework

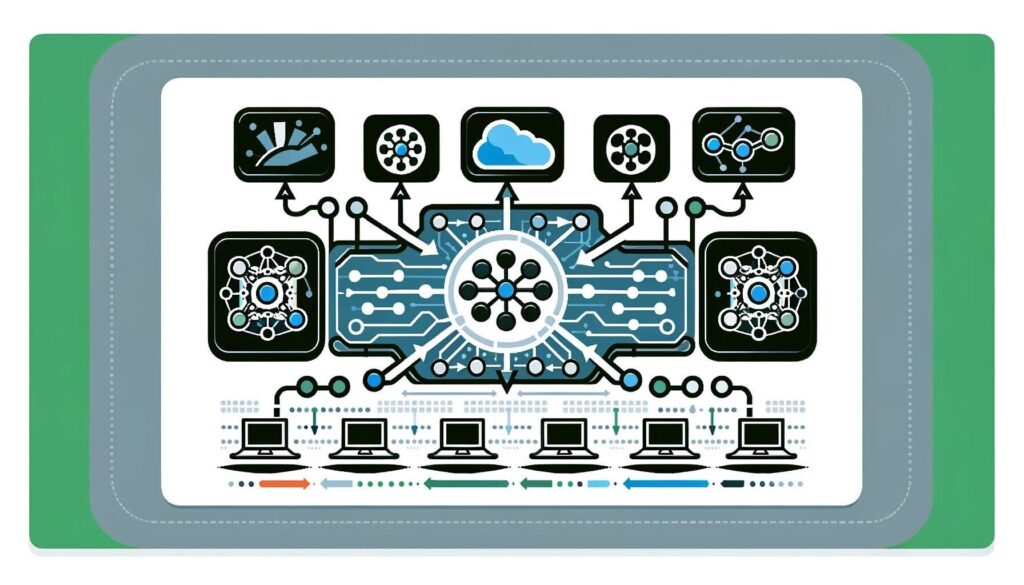

PeSFA enhances generalization by decoupling SFs from the policy and introducing a policy representation module. This module learns a representation of the policy space, allowing the system to generalize across different tasks by searching for suitable policy representations.

- Empirical Validation and Applications

Experiments in representative environments demonstrated that PeSFA significantly improves learning speed and generalization. In navigation and robotic manipulation tasks, PeSFA enabled agents to transfer knowledge from previous tasks to new challenges, reducing the time and interactions needed to learn effective policies.

The rest of this section looks more closely at how PeSFA works and what it implies for various applications.

The Generalization Challenge in DRL

Generalization in DRL is the ability of an agent to apply learned behaviors to new, unseen tasks or environments. Traditional DRL models often struggle with generalization because they tend to overfit to the specific conditions encountered during training. As a result, these agents can perform well in familiar settings but fail when faced with new, slightly different scenarios.

Successor Features (SFs) offer a promising solution to this challenge by decoupling the environment’s dynamics from the reward structure. This separation allows agents to transfer knowledge more effectively between tasks with similar dynamics but different rewards. However, traditional SFs depend heavily on the policy learned during training, which might not be optimal for other tasks. This reliance can limit the agent’s ability to generalize effectively.
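A small tabular example may help show both the benefit and the limitation. The sketch below assumes state-based features, a known transition matrix under one fixed policy, and a reward that is linear in the features (reward = phi · w). The three-state chain and all names are hypothetical. The successor features are computed once and reused for two different reward vectors, but they are only valid for the policy that produced `P_pi`.

```python
import numpy as np


def successor_features(P_pi, phi, gamma):
    """Successor features of a fixed policy in a small tabular MDP.

    psi[s] = E[sum_t gamma^t * phi[s_t] | s_0 = s], which in matrix form is
    psi = (I - gamma * P_pi)^(-1) @ phi.
    P_pi: (S, S) state-transition matrix under the policy
    phi:  (S, d) feature vector for each state
    """
    n_states = P_pi.shape[0]
    return np.linalg.solve(np.eye(n_states) - gamma * P_pi, phi)


# Hypothetical chain: state 0 -> 1 -> 2, and state 2 loops on itself.
P_pi = np.array([[0.0, 1.0, 0.0],
                 [0.0, 0.0, 1.0],
                 [0.0, 0.0, 1.0]])
phi = np.eye(3)  # one-hot state features
gamma = 0.9

psi = successor_features(P_pi, phi, gamma)

# Two tasks share the dynamics but reward different states.
w_task1 = np.array([0.0, 0.0, 1.0])
w_task2 = np.array([1.0, 0.0, 0.0])
v_task1 = psi @ w_task1  # values under the same policy, task 1
v_task2 = psi @ w_task2  # values under the same policy, task 2
```

Switching tasks only requires a new weight vector `w`, not a new round of policy evaluation. If the policy changes, however, `P_pi` changes and `psi` must be recomputed, which is the dependence on the learned policy noted above.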

PeSFA introduces a novel approach to enhance the generalization and efficiency of SFs by decoupling them from the learned policy. This is achieved through the following components:

- Policy Representation Module:

PeSFA incorporates a policy representation module that learns a comprehensive representation of the policy space. This module abstracts the essential features of the policies, allowing the system to understand and represent the policy landscape more effectively.

- Decoupling SFs from the Policy:

By using the policy representation as an input to the Successor Features, PeSFA allows the agent to generalize across different tasks more efficiently. This decoupling means that the SFs are no longer tightly bound to a specific policy learned during training, enhancing the agent’s adaptability.

- Efficient Adaptation to New Tasks:

With the policy representation module in place, the DRL agent can quickly search through the policy space to find the most suitable policy representation for new tasks. This ability significantly improves the efficiency of learning and adaptation, as the agent can leverage prior knowledge to accelerate the learning process in new environments.
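The three components above can be sketched in a toy tabular setting. This is an assumption-heavy illustration rather than the PeSFA architecture: the policy representation `z` is simply the table of action probabilities, the function `sf_given_policy` computes exact successor features where PeSFA would use a learned approximator, and `search_policy_representation` enumerates a handful of candidates in place of whatever search procedure the method actually uses.

```python
import numpy as np


def sf_given_policy(z, P, phi, gamma):
    """Successor features as a function of a policy representation z.

    Stand-in for a learned approximator psi(s, z).
    z:   (S, A) action probabilities (hypothetical policy representation)
    P:   (A, S, S) transition probabilities for each action
    phi: (S, d) state features
    """
    # Transition matrix induced by the policy: P_pi[s, t] = sum_a z[s, a] * P[a, s, t]
    P_pi = np.einsum("sa,ast->st", z, P)
    n_states = P.shape[1]
    return np.linalg.solve(np.eye(n_states) - gamma * P_pi, phi)


def search_policy_representation(candidates, w, start_state, P, phi, gamma):
    """Pick the candidate representation with the highest value for task w."""
    values = [
        float(sf_given_policy(z, P, phi, gamma)[start_state] @ w)
        for z in candidates
    ]
    return int(np.argmax(values)), values


# Hypothetical 2-state MDP: action 0 stays in place, action 1 switches state.
P = np.array([[[1.0, 0.0], [0.0, 1.0]],
              [[0.0, 1.0], [1.0, 0.0]]])
phi = np.eye(2)
gamma = 0.9

candidates = [
    np.array([[1.0, 0.0], [1.0, 0.0]]),  # always stay
    np.array([[0.0, 1.0], [0.0, 1.0]]),  # always switch
    np.array([[0.0, 1.0], [1.0, 0.0]]),  # switch in state 0, stay in state 1
]

# New task: reward only in state 1, starting from state 0.
best, values = search_policy_representation(
    candidates, np.array([0.0, 1.0]), 0, P, phi, gamma
)
```

Because the successor features take `z` as an input, evaluating a different policy on a new task is a function call plus a dot product with `w`, rather than a fresh round of training. That is the sense in which the search over policy representations can be cheap.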

PeSFA can enhance the efficiency and flexibility of robots performing diverse tasks, such as assembly, maintenance, and exploration. By enabling robots to generalize across different tasks without extensive retraining, development time and costs can be significantly reduced.

In navigation tasks, PeSFA-enabled agents transferred knowledge from previously learned tasks to new navigation challenges. This transfer reduced the time and number of interactions needed to learn effective policies for new environments.

In robotic manipulation tasks, agents using PeSFA quickly adapted to different objects and manipulation goals. This adaptability showcased the robustness and efficiency of the PeSFA approach in dynamic and unpredictable settings.

In autonomous driving, PeSFA can help vehicles adapt to various driving conditions and scenarios, improving safety and performance. This framework allows autonomous systems to handle a broader range of situations with greater reliability, from urban streets to rural roads.

In industrial automation, PeSFA can improve the adaptability of machines to different manufacturing processes and product lines. This adaptability ensures that machines can efficiently handle changes in production requirements, enhancing overall productivity.

Towards More Robust and Adaptable Autonomous Systems

Bilinear Policy Networks, Forked Agents, and PeSFA represent significant advances in improving generalization and efficiency in reinforcement learning. These approaches offer practical strategies for developing autonomous systems that are more robust and adaptable to diverse and dynamic environments. Engineers and researchers are encouraged to explore and implement these findings to enhance the performance and reliability of their autonomous systems, paving the way for more intelligent and versatile AI agents in the future.